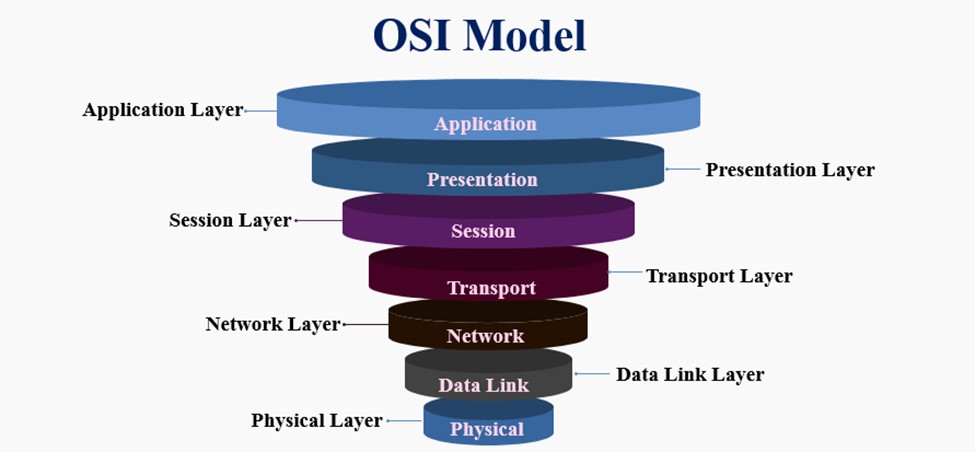

Hello aspiring Ethical Hackers. In this blogpost you will learn about the OSI model. The OSI (Open Systems Interconnection) Model is a conceptual framework for the design and implementation of computer networks. It was developed by the International Organization for Standardization (ISO) and serves as a reference for designing communication protocols and interfaces. As an ethical hacker, you need a solid understanding of the basic structure of networks and of the protocols and frameworks that govern them. The OSI Model matters because it gives everyone who designs, builds, or tests networks a common language for describing them.

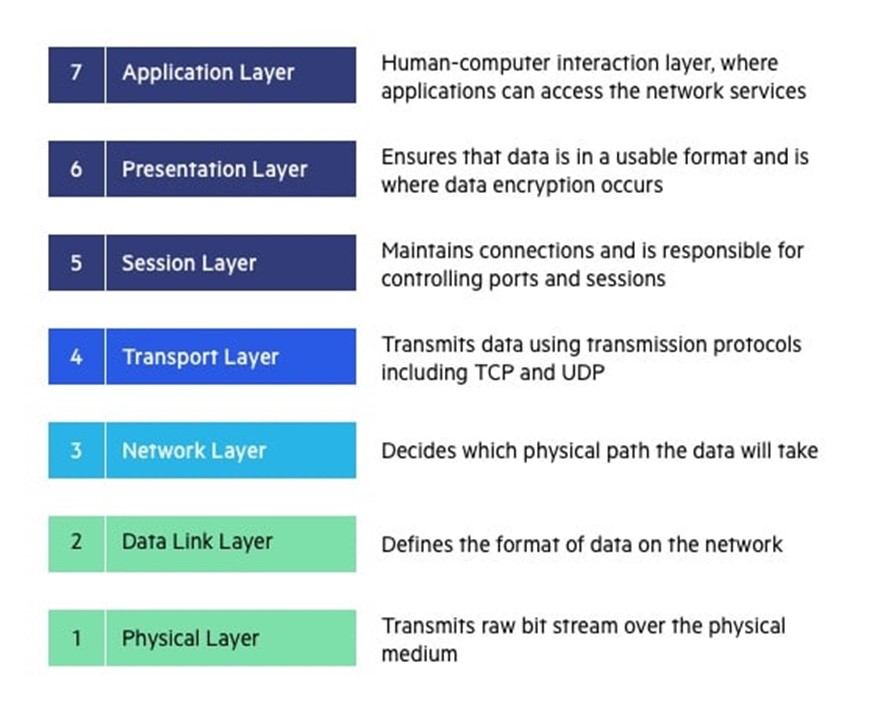

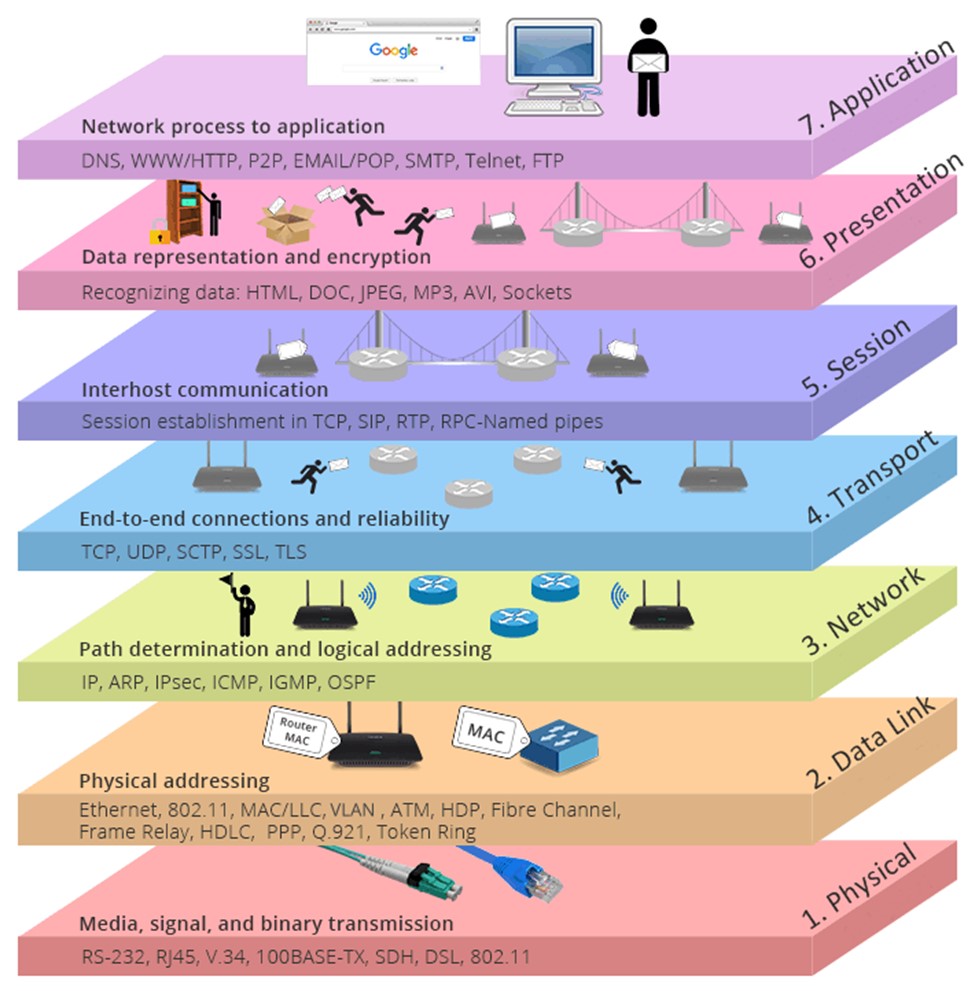

In the OSI Model, network communication is divided into seven layers. From bottom to top, these are the Physical Layer, Data Link Layer, Network Layer, Transport Layer, Session Layer, Presentation Layer, and Application Layer. In this article, we will explore each of these layers in more detail.

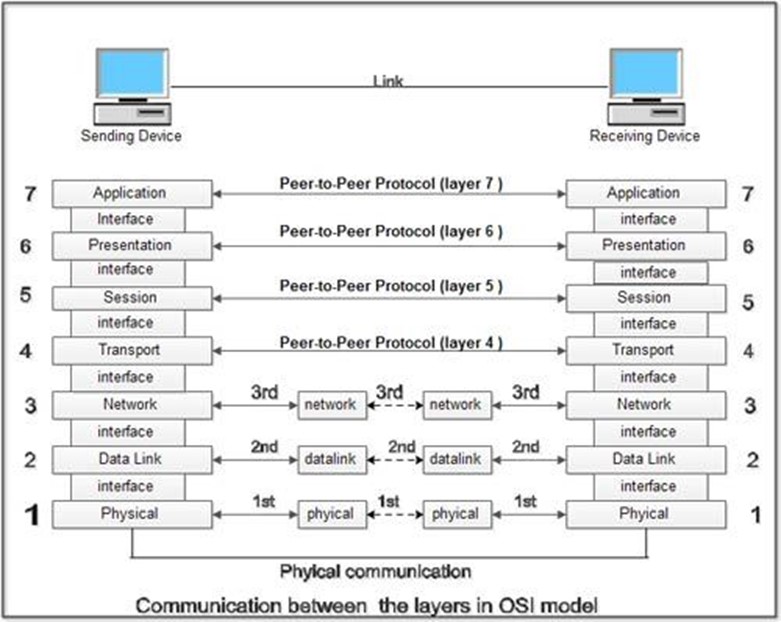

The OSI model describes how information leaves an application on one device, crosses a physical medium (passing through network devices such as routers along the way), and reaches an application at its final destination. In simpler terms, it establishes a standardized way for different systems to communicate. It does this by breaking the communication process into seven distinct layers, each with its own protocols and functions.
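To make the layering idea concrete, here is a minimal sketch of how data could be wrapped on the way down the stack and unwrapped on the way up. The bracketed header labels and the function names `encapsulate` and `decapsulate` are made up for illustration; real protocols use binary headers, and not every layer adds one.

```python
# Illustrative only: the bracketed labels stand in for real protocol headers.
LAYERS_TOP_DOWN = [
    "Application", "Presentation", "Session",
    "Transport", "Network", "Data Link", "Physical",
]


def encapsulate(message: str) -> str:
    """Wrap the message in one labelled header per layer, top layer first."""
    unit = message
    for layer in LAYERS_TOP_DOWN:
        unit = f"[{layer}]{unit}"
    return unit


def decapsulate(unit: str) -> str:
    """Strip the headers in the opposite order, as the receiving stack would."""
    for layer in reversed(LAYERS_TOP_DOWN):
        prefix = f"[{layer}]"
        if not unit.startswith(prefix):
            raise ValueError(f"expected a {layer} header")
        unit = unit[len(prefix):]
    return unit


wire = encapsulate("hello")
print(wire)               # outermost header belongs to the lowest layer
print(decapsulate(wire))  # the receiver recovers the original message
```

The point of the sketch is the ordering: the sender adds headers from the top layer down, so the receiver has to remove them from the bottom layer up.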


Layer 1: Physical Layer

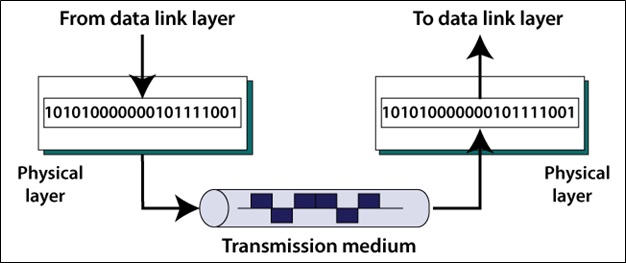

The Physical Layer is the first layer of the OSI Model and is concerned with the physical transmission of data between computers. It defines the electrical, mechanical, and functional specifications for the physical connection between devices.

The role of the Physical Layer in networking is to move raw bits between directly connected devices as signals, such as voltages on a cable, light in a fiber, or radio waves. It does not interpret the data it carries; framing, addressing, and the meaning of the bits are left to the layers above.

The Physical Layer is responsible for several key functions, including:

- Establishing and maintaining a physical connection between devices

- Defining the electrical and mechanical specifications for data transmission

- Encoding and decoding data for transmission

- Defining the physical characteristics of the transmission medium

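Some examples of Physical Layer technologies include the cabling and signaling parts of Ethernet (for example, 1000BASE-T), the radio part of Wi-Fi (IEEE 802.11), and the Bluetooth radio. Note that Ethernet, Wi-Fi, and Bluetooth also define Data Link Layer functions, so they span Layers 1 and 2.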
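As an example of the "encoding and decoding data for transmission" function listed above, here is a small sketch of Manchester encoding, the line code used by classic 10 Mbit/s Ethernet. It assumes the IEEE 802.3 convention (a 1 is a low-to-high transition, a 0 is high-to-low) and, for readability, sends the most significant bit first, which is a simplification rather than what Ethernet does on the wire. The function names are hypothetical.

```python
def manchester_encode(data: bytes) -> list[int]:
    """Return signal levels (1 = high, 0 = low), two levels per data bit."""
    levels = []
    for byte in data:
        for shift in range(7, -1, -1):  # most significant bit first (simplification)
            bit = (byte >> shift) & 1
            # IEEE 802.3 convention: 1 -> low then high, 0 -> high then low
            levels.extend([0, 1] if bit else [1, 0])
    return levels


def manchester_decode(levels: list[int]) -> bytes:
    """Turn pairs of signal levels back into bytes."""
    if len(levels) % 16 != 0:
        raise ValueError("expected 16 signal levels per byte")
    out = bytearray()
    for start in range(0, len(levels), 16):
        byte = 0
        for i in range(start, start + 16, 2):
            pair = (levels[i], levels[i + 1])
            if pair == (0, 1):
                bit = 1
            elif pair == (1, 0):
                bit = 0
            else:
                raise ValueError("no mid-bit transition: invalid Manchester symbol")
            byte = (byte << 1) | bit
        out.append(byte)
    return bytes(out)


signal = manchester_encode(b"OSI")
print(len(signal), "signal levels for 3 bytes")
print(manchester_decode(signal))
```

Because every bit contains a transition, the receiver can recover the sender's clock from the signal itself, at the cost of using two signal levels per bit.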

Layer 2: Data Link Layer

The Data Link Layer is the second layer of the OSI Model and is concerned with the delivery of data frames between devices on the same link or network segment. It defines the format of the frames that are transmitted between devices and provides error detection, and in some technologies error correction.

The role of the Data Link Layer in networking is to turn the raw bit stream of the Physical Layer into a dependable link between neighboring devices. It does this by packaging data into frames, addressing them to the right device on the local network, and checking each received frame for transmission errors so that damaged frames can be discarded or resent.

The Data Link Layer is responsible for several key functions, including:

- Defining the format of the data frames that are transmitted between devices

- Error detection, and in some technologies error correction

- Flow control and media access control

- Presenting a uniform, media-independent interface to the Network Layer
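The frame format and error detection functions above can be sketched in a few lines. This is a simplified, Ethernet-like frame and not a byte-exact Ethernet implementation: it uses `zlib.crc32` as the checksum (real Ethernet uses the same CRC-32 polynomial, but with its own bit ordering on the wire), and the MAC addresses, payload, and function names are invented for the example.

```python
import struct
import zlib


def build_frame(dst: bytes, src: bytes, ethertype: int, payload: bytes) -> bytes:
    """Build destination + source + type + payload, then append a CRC-32 trailer."""
    if len(dst) != 6 or len(src) != 6:
        raise ValueError("MAC addresses must be 6 bytes long")
    body = dst + src + struct.pack("!H", ethertype) + payload
    return body + struct.pack("!I", zlib.crc32(body))


def frame_is_intact(frame: bytes) -> bool:
    """Recompute the checksum and compare it with the trailer."""
    body, trailer = frame[:-4], frame[-4:]
    return zlib.crc32(body) == struct.unpack("!I", trailer)[0]


# Hypothetical addresses; 0x0800 is the EtherType value for IPv4.
frame = build_frame(
    dst=bytes.fromhex("aabbccddeeff"),
    src=bytes.fromhex("112233445566"),
    ethertype=0x0800,
    payload=b"hello from layer 3",
)
print(frame_is_intact(frame))

# Flip a single bit to simulate noise on the link.
damaged = bytearray(frame)
damaged[20] ^= 0x01
print(frame_is_intact(bytes(damaged)))
```

Note that the checksum only detects the damage. What happens next (dropping the frame, or asking for it again) depends on the link technology.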

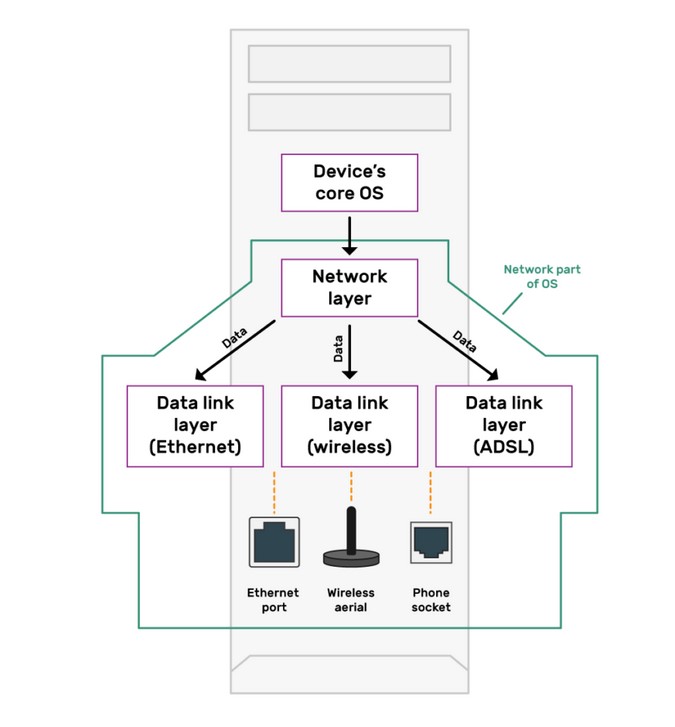

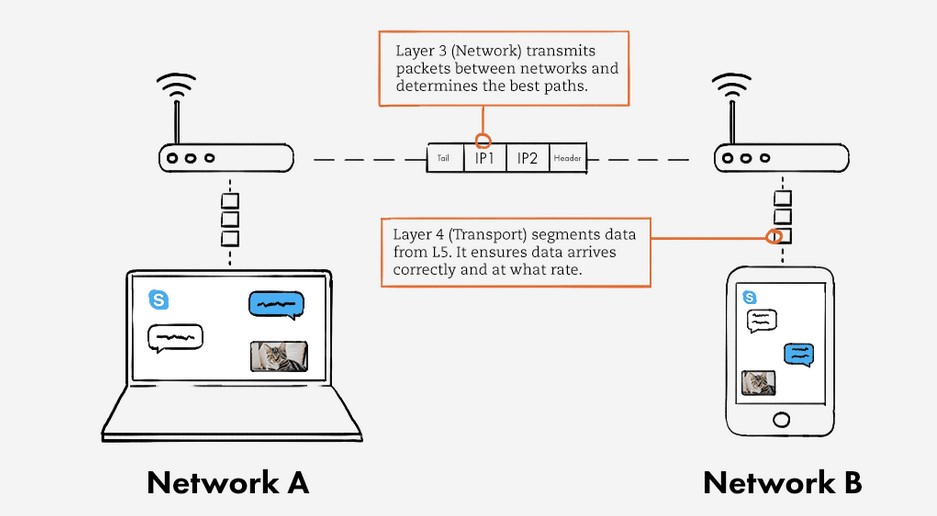

Layer 3: Network Layer

The Network Layer is the third layer of the OSI Model and is concerned with the routing of data between computer networks. It provides the means for transmitting data from one network to another and ensures that data is delivered to its intended destination.

The role of the Network Layer in networking is to move packets between different networks. It gives each host a logical address and chooses a path for each packet, hop by hop, so that it reaches its intended destination even when the sender and receiver are many networks apart.

The Network Layer is responsible for several key functions, including:

- Routing data between computer networks

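- Providing logical addressing (such as IP addresses) so that hosts on different networks can reach each other

- Encapsulating data for transmission between networks

- Forwarding packets along efficient paths; delivery is typically best-effort, with reliability left to the higher layers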

Some examples of Network Layer technologies include IP (Internet Protocol) and ICMP (Internet Control Message Protocol).
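Routing decisions are usually made by longest-prefix match: among all routes that contain the destination address, the most specific one wins. Here is a minimal sketch using Python's standard `ipaddress` module. The routing table and the gateway names are made up for the example.

```python
import ipaddress

# Hypothetical routing table: (destination prefix, next hop).
ROUTES = [
    ("0.0.0.0/0", "gateway-a"),    # default route, matches every IPv4 address
    ("10.0.0.0/8", "gateway-b"),
    ("10.1.2.0/24", "gateway-c"),
]


def next_hop(destination: str) -> str:
    """Pick the matching route with the longest prefix (the most specific one)."""
    address = ipaddress.ip_address(destination)
    matches = []
    for prefix, hop in ROUTES:
        network = ipaddress.ip_network(prefix)
        if address in network:
            matches.append((network.prefixlen, hop))
    if not matches:
        raise LookupError(f"no route to {destination}")
    return max(matches)[1]


for target in ("10.1.2.7", "10.9.9.9", "8.8.8.8"):
    print(target, "->", next_hop(target))
```

Real routers use much faster data structures for the lookup, but the decision rule is the same.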

Layer 4: Transport Layer

The Transport Layer is the fourth layer of the OSI Model and is responsible for data transfer between applications on end systems. It is the layer that divides data into manageable segments and, when a reliable protocol is used, ensures that each segment reaches its destination without errors or loss.

The Transport Layer is critical to the functioning of a network because it is where reliability is added on top of the best-effort delivery of the lower layers. It does this by dividing data into segments, which are then transmitted and reassembled at the destination. This layer also provides flow control, which prevents the sender from overwhelming the receiver, and error control, which detects damaged or missing segments and recovers from them, usually by retransmission.

The Transport Layer performs several key functions, including:

- Segmentation: The Transport Layer divides data into segments for transmission.

- Flow Control: This function ensures that data is transmitted at a rate that the receiver can handle.

- Error Control: The Transport Layer checks for damaged or missing segments and, in reliable protocols, arranges for them to be retransmitted.

- End-to-End Connectivity: The Transport Layer provides end-to-end connectivity between applications running on different end systems.
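Segmentation and reassembly can be sketched as follows. The tuple layout (byte offset, chunk) and the function names are assumptions made for this example; TCP also numbers bytes with sequence numbers, but its real segment header carries far more than an offset.

```python
def segment(data: bytes, max_size: int) -> list[tuple[int, bytes]]:
    """Split data into (offset, chunk) pairs of at most max_size bytes each."""
    return [
        (offset, data[offset:offset + max_size])
        for offset in range(0, len(data), max_size)
    ]


def reassemble(segments: list[tuple[int, bytes]]) -> bytes:
    """Put the chunks back in offset order, whatever order they arrived in."""
    ordered = sorted(segments, key=lambda item: item[0])
    return b"".join(chunk for _, chunk in ordered)


message = b"The quick brown fox jumps over the lazy dog"
pieces = segment(message, max_size=8)

# Simulate out-of-order arrival by reversing the segments.
arrived = list(reversed(pieces))
print(reassemble(arrived) == message)
```

The offsets are what let the receiver rebuild the original data even when the network delivers segments in a different order from the one in which they were sent.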

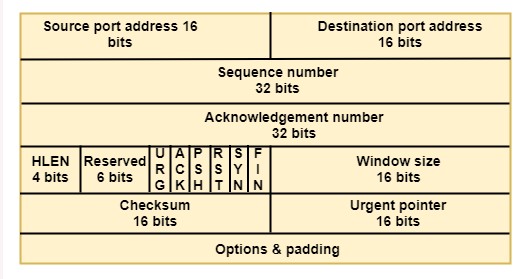

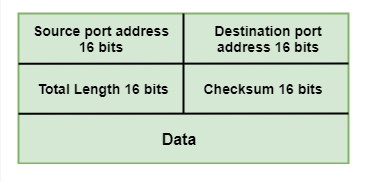

There are two main types of Transport Layer protocols:

- TCP (Transmission Control Protocol): This is a reliable, connection-oriented protocol that ensures that data is transmitted accurately and completely.

- UDP (User Datagram Protocol): This is an unreliable, connectionless protocol that does not guarantee delivery, ordering, or protection against duplicates, although it carries a checksum to detect corrupted data. It is used for applications that favor low latency over guaranteed delivery, such as live video streaming, voice calls, and DNS lookups.
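The difference between the two styles can be simulated without touching the network. The sketch below compares a send-once, UDP-like sender with a stop-and-wait sender that retries each packet until it gets through. Stop-and-wait is a much simpler scheme than what TCP really does (TCP uses sliding windows, timers, and acknowledgments that can themselves be lost), and the channel here is just a seeded random number generator, so treat it as an illustration of the idea only.

```python
import random


def send_once(packets: list[str], loss_rate: float, rng: random.Random) -> list[str]:
    """UDP-like: every packet is sent exactly once; lost packets are simply gone."""
    return [p for p in packets if rng.random() >= loss_rate]


def send_with_retries(
    packets: list[str], loss_rate: float, rng: random.Random, max_tries: int = 50
) -> tuple[list[str], int]:
    """Stop-and-wait: resend each packet until it arrives, then move to the next."""
    delivered = []
    transmissions = 0
    for packet in packets:
        for _ in range(max_tries):
            transmissions += 1
            if rng.random() >= loss_rate:  # packet made it through this time
                delivered.append(packet)
                break
        else:
            raise TimeoutError(f"gave up on {packet!r}")
    return delivered, transmissions


packets = [f"packet-{n}" for n in range(10)]

unreliable = send_once(packets, loss_rate=0.3, rng=random.Random(1))
reliable, attempts = send_with_retries(packets, loss_rate=0.3, rng=random.Random(1))

print(len(unreliable), "of", len(packets), "arrived without retries")
print(len(reliable), "of", len(packets), "arrived with retries, using", attempts, "transmissions")
```

The reliable sender always delivers everything in order, but it pays for that with extra transmissions and waiting time, which is exactly the trade-off between TCP and UDP.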

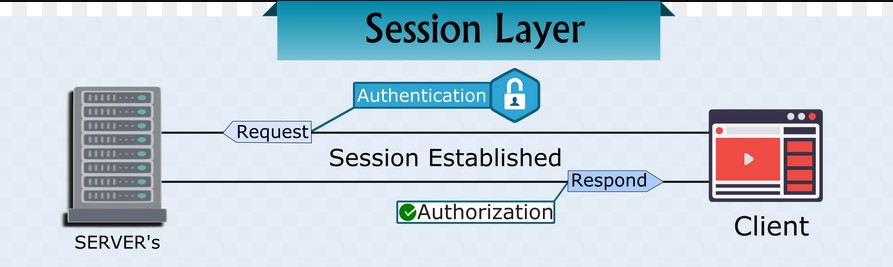

Layer 5: Session Layer

The Session Layer is the fifth layer of the OSI Model and is responsible for establishing, managing, and terminating communication sessions between applications. A session is a continuous exchange of information between two applications and can involve multiple data transfers.

The Session Layer provides a framework for applications to communicate with each other. It coordinates the communication process between the applications and ensures that the data is transmitted in an orderly and synchronized manner. The Session Layer also ensures that the communication between the applications is maintained until it is terminated by either the sender or the receiver.

The Session Layer performs several key functions, including:

- Session Establishment: The Session Layer establishes a communication session between two applications.

- Session Management: The Session Layer manages the communication session by maintaining the synchronization of data transfer.

- Session Termination: The Session Layer terminates the communication session when it is no longer needed.
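The three functions above map naturally onto a small state machine. The class below is a hypothetical sketch, not the API of any real session protocol: it tracks whether the session is open and keeps a synchronization point so that an interrupted transfer can resume from the last confirmed position instead of starting over.

```python
class Session:
    """A toy session with establishment, synchronization, and termination."""

    def __init__(self) -> None:
        self.state = "closed"
        self.sent: list[str] = []
        self.checkpoint = 0  # number of messages confirmed at the last sync point

    def open(self) -> None:
        if self.state != "closed":
            raise RuntimeError("session is already open")
        self.state = "open"

    def send(self, message: str) -> None:
        if self.state != "open":
            raise RuntimeError("cannot send on a closed session")
        self.sent.append(message)

    def sync(self) -> int:
        """Mark everything sent so far as confirmed and return the new checkpoint."""
        self.checkpoint = len(self.sent)
        return self.checkpoint

    def rollback(self) -> None:
        """After an interruption, discard anything sent since the last sync point."""
        del self.sent[self.checkpoint:]

    def close(self) -> None:
        self.state = "closed"


session = Session()
session.open()
session.send("part 1")
session.send("part 2")
session.sync()            # parts 1 and 2 are now confirmed
session.send("part 3")    # imagine the connection drops here
session.rollback()        # resume from the checkpoint: only part 3 must be resent
print(session.sent)
session.close()
print(session.state)
```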

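Few modern protocols map cleanly onto the Session Layer, and in the TCP/IP model all of the following are treated as application-layer protocols. Protocols that are often associated with the Session Layer because they set up and manage sessions include:

- NFS (Network File System): This is a popular protocol for sharing files over a network. It relies on RPC (Remote Procedure Call) to manage the exchange between client and server.

- RDP (Remote Desktop Protocol): This is a protocol for remote access to a desktop, which maintains a session for as long as the user is connected.

- SSH (Secure Shell): This is a protocol for secure remote access to a computer, which can carry several channels inside a single session.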

Layer 6: Presentation Layer

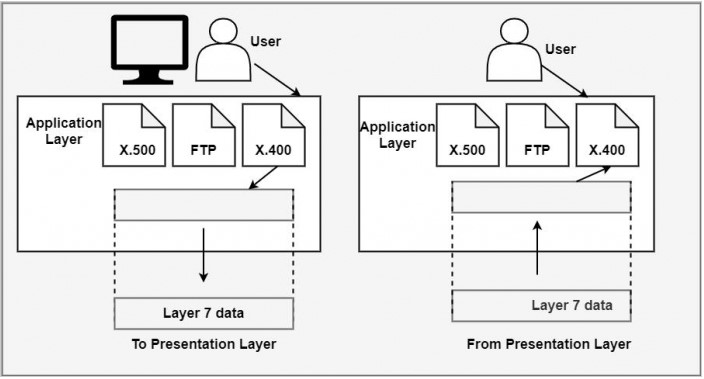

The Presentation Layer is the sixth layer of the OSI Model and is responsible for providing a common format for data exchange between applications. It converts data from the Application Layer into a standardized format that both the sender and the receiver can understand.

The Presentation Layer is responsible for data representation and encryption/decryption of data. It ensures that the data transmitted between applications is in a standard format and can be understood by both the sender and receiver. The Presentation Layer also provides a means for data compression and decompression to reduce the amount of data transmitted over the network.

The Presentation Layer performs several key functions, including:

- Data Conversion: The Presentation Layer converts data from the Application Layer into a standard format that can be understood by both the sender and receiver.

- Data Compression/Decompression: The Presentation Layer can compress data to reduce its size for transmission over the network and decompress it for use by the recipient.

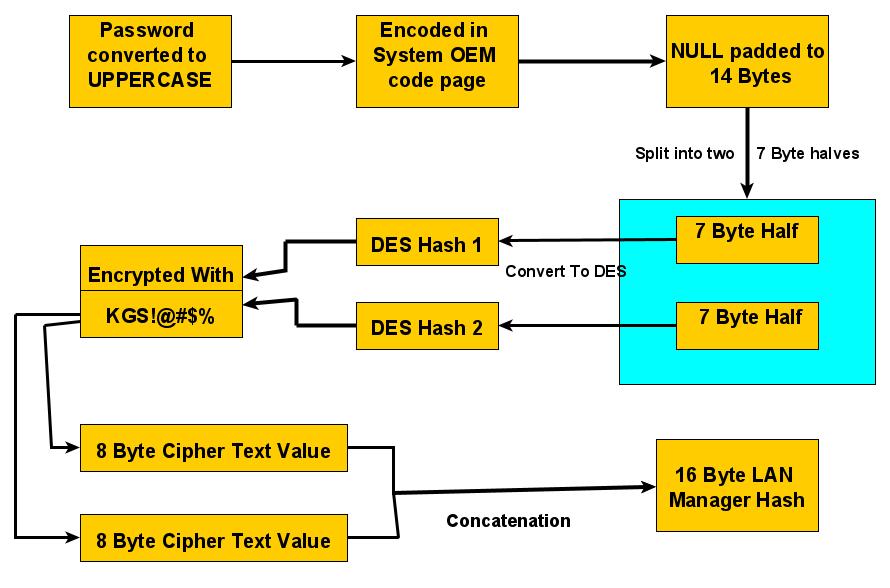

- Data Encryption/Decryption: The Presentation Layer can encrypt data for transmission over the network and decrypt it for use by the recipient.
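Here is a minimal sketch of the first two functions, data conversion and compression, using only Python's standard library. It assumes JSON encoded as UTF-8 as the "standard format" and zlib for compression; both are choices made for the example, not something the OSI Model prescribes. Encryption is left out because the standard library does not ship a general-purpose cipher.

```python
import json
import zlib


def to_wire(record: dict) -> bytes:
    """Convert a Python dict to a common format (JSON/UTF-8), then compress it."""
    text = json.dumps(record, sort_keys=True)
    return zlib.compress(text.encode("utf-8"))


def from_wire(blob: bytes) -> dict:
    """Reverse the steps on the receiving side: decompress, decode, parse."""
    text = zlib.decompress(blob).decode("utf-8")
    return json.loads(text)


record = {"user": "alice", "notes": "a" * 500}
blob = to_wire(record)

print(len(json.dumps(record)), "bytes as plain JSON")
print(len(blob), "bytes after compression")
print(from_wire(blob) == record)
```

The receiver does not need to know how the sender stores the record internally. It only needs to agree on the format that travels over the network.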

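Several standards and protocols are commonly associated with the Presentation Layer, including:

- MIME (Multipurpose Internet Mail Extensions): This is a standard for describing the type and encoding of content, such as email attachments and other multimedia data.

- SSL (Secure Sockets Layer) and TLS (Transport Layer Security): These are protocols for securing data transmission over the internet. SSL is deprecated and TLS is its successor. TLS is often placed at the Presentation Layer because it encrypts data, although it does not map cleanly onto a single OSI layer.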

Layer 7: Application Layer

The Application Layer is the top layer of the OSI Model and is the layer closest to the user. It does not provide the user interface itself; instead, it is the point where software such as a web browser or email client hands its requests to the network.

The Application Layer is responsible for providing network services to applications. It gives applications a means to request and receive those services and, through them, to use everything provided by the lower layers of the OSI Model.

The Application Layer performs several key functions, including:

- Network Services: The Application Layer provides network services to applications, including file transfer, email, and other network-based applications.

- Interface to the User's Software: The Application Layer is the layer that user-facing programs talk to, so it is the closest the OSI Model gets to the user. The user interface itself belongs to the program, not to the network.

- Network Resource Access: The Application Layer provides a means for applications to access network resources, such as databases or file servers.

There are several Application Layer protocols, including:

- HTTP (Hypertext Transfer Protocol): This is the primary protocol used for web browsing and web application access.

- FTP (File Transfer Protocol): This is a protocol for transferring files between systems.

- SMTP (Simple Mail Transfer Protocol): This is a protocol for sending email.
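Application Layer protocols such as HTTP are often plain text, which makes them easy to inspect. The sketch below builds a minimal HTTP/1.1 GET request and parses the status line of a response, without opening any connection. The helper names and the sample response are invented for the example, and the parser assumes the status line includes a reason phrase.

```python
def build_get_request(host: str, path: str = "/") -> bytes:
    """Build a minimal HTTP/1.1 GET request; header lines end with CRLF."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        "Connection: close",
        "",  # blank line marks the end of the headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_status_line(response: bytes) -> tuple[str, int, str]:
    """Return (version, status code, reason) from the first line of a response."""
    first_line = response.split(b"\r\n", 1)[0].decode("ascii")
    version, code, reason = first_line.split(" ", 2)
    return version, int(code), reason


print(build_get_request("example.com", "/index.html").decode("ascii"))

# A made-up response, as a server might send it back.
sample = b"HTTP/1.1 404 Not Found\r\nContent-Length: 0\r\n\r\n"
print(parse_status_line(sample))
```

Being able to read and write these messages by hand is useful when you inspect traffic with a proxy or a packet capture tool.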

To sum up, the Application Layer is where network-based applications and services meet the network. Through it, applications reach network services and resources without having to deal with the details handled by the lower layers of the OSI Model.

The OSI Model in Real-World Networking

The OSI Model is widely used in real-world networking, as it provides a standardized framework for understanding and designing networks.

This model is used in a wide variety of applications, including:

1. Network Design:

The OSI Model is used as a reference for network design, helping network engineers to understand the various components and protocols involved in a network.

2. Network Troubleshooting:

The OSI Model provides a standardized framework for troubleshooting network problems, making it easier for network technicians to diagnose and resolve issues.

3. Network Optimization:

The OSI Model is used to optimize network performance by helping network engineers to identify bottlenecks and other performance issues.

Importance of understanding the OSI Model for network technicians:

Understanding the OSI Model is critical for network technicians, as it provides a standardized framework for network design and troubleshooting. Network technicians who understand the OSI Model are better equipped to diagnose and resolve network problems, as well as to design and optimize network performance.

Advantages of OSI Model

There are several advantages to using the OSI Model for network design and troubleshooting, including:

- Standardization: The OSI Model provides a standardized framework for network design, making it easier for network engineers to understand the various components and protocols involved in a network.

- Modularity: Each layer has a well-defined job and talks only to the layers directly above and below it, so a technology at one layer can be changed or replaced without redesigning the others.

- Troubleshooting: The OSI Model provides a standardized framework for troubleshooting network problems, making it easier for network technicians to isolate a fault to a specific layer and resolve it.

In conclusion, understanding the OSI Model is essential for anyone working in the field of computer networking. It provides a standardized framework for understanding and designing networks, and those who know it well are better equipped to diagnose and resolve network problems, optimize network performance, and provide network services to users.